CNN Style Transfer Paper Reproduction

# NeuralStyle

My own implementation of the CVPR 2016 paper Image Style Transfer Using Convolutional Neural Networks. I find the work simple but elegant (the paper, that is, not my implementation), with good interpretability.

- The paper is available here: CVPR 2016 open access

- My personal notes on this paper (in Chinese): Blog of Enigmatisms/CNN Style Transfer论文复现

Addendum (2021.11.15): I do not intend to analyze or explain this paper on the blog. It is simple and I remember it well, so there is little point in writing it up. Original GitHub repo: Github🔗: Enigmatisms/NeuralStyle. This post is simply the README.md of that repo.

## To run the code

Make sure PyTorch and TensorBoard are installed on your device. CUDA is supported as well, though I could not use it myself: the API calls work, but my GPU did not have enough memory. I am currently using PyTorch 1.7.0 with CUDA 10.1 (cu101).

On initialization, the code may need to download the pretrained VGG-19 network, which requires a network connection.

## Tree - Working Directory

- (folder) content: Where I keep content images.
- (folder) imgs: Where the output goes.
- (folder) style:
  - lossTerm.py: Style loss and content loss are implemented here.
  - precompute.py: VGG-19 utilization, style and content extractors.
  - transfer.py: Executable script.
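For orientation, the two loss terms that lossTerm.py is described as implementing can be sketched from the paper's definitions. This is a minimal NumPy sketch, not the repo's PyTorch code: the function names, the equal weighting of style layers, and the `alpha` / `beta` defaults are assumptions made for illustration.

```python
import numpy as np


def gram_matrix(features: np.ndarray) -> np.ndarray:
    """Gram matrix of a feature map of shape (N, H, W): N filters, M = H * W positions."""
    n = features.shape[0]
    flat = features.reshape(n, -1)  # (N, M)
    return flat @ flat.T            # (N, N) inner products between filter responses


def content_loss(generated: np.ndarray, content: np.ndarray) -> float:
    """Half the squared error between two feature maps of the same layer."""
    return 0.5 * float(np.sum((generated - content) ** 2))


def style_layer_loss(generated: np.ndarray, style: np.ndarray) -> float:
    """Per-layer style term: squared Gram difference scaled by 1 / (4 N^2 M^2)."""
    n = generated.shape[0]
    m = generated[0].size
    diff = gram_matrix(generated) - gram_matrix(style)
    return float(np.sum(diff ** 2)) / (4.0 * n ** 2 * m ** 2)


def total_loss(content_term: float, style_terms: list,
               alpha: float = 1.0, beta: float = 1000.0) -> float:
    """alpha * content + beta * style, with equal weights over the style layers.

    The default values of alpha and beta are hypothetical placeholders.
    """
    style_term = sum(style_terms) / len(style_terms)
    return alpha * content_term + beta * style_term
```

In the paper, the content term is taken from one VGG-19 layer and the style terms from several, and the image itself is the variable being optimized; in practice an autograd framework such as PyTorch would supply the gradients that this sketch leaves out.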

## A Little Help

Always run transfer.py from the folder style/. Running `python ./transfer.py -h` prints:

```
usage: transfer.py [-h] [--alpha ALPHA] [--epoches EPOCHES]
```
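A usage line of this shape is what `argparse` generates. The sketch below shows a parser that would produce it, assuming only the two flags visible above. The helper name `build_parser`, the argument types, the defaults, and the help strings are hypothetical; the actual transfer.py may define them differently or accept further options.

```python
import argparse


def build_parser() -> argparse.ArgumentParser:
    """Hypothetical parser covering only the flags shown in the help output."""
    parser = argparse.ArgumentParser(prog="transfer.py")
    # Assumed to be a float weight; its exact meaning in the repo is not stated.
    parser.add_argument("--alpha", type=float, default=1.0,
                        help="loss weight (assumed float, default is a placeholder)")
    # The flag is spelled 'epoches' in the original help output.
    parser.add_argument("--epoches", type=int, default=800,
                        help="number of optimization iterations (placeholder default)")
    return parser


# Parse an explicit argument list instead of sys.argv so the example is reproducible.
args = build_parser().parse_args(["--alpha", "0.5", "--epoches", "100"])
print(args.alpha, args.epoches)
```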

## Requirements

Run the following to find out (and install) what is required:

```
python3 -m pip install -r requirements.py
```

## Training Process

- Something strange happened: the loss exploded twice, but recovered. TensorBoard graphs:

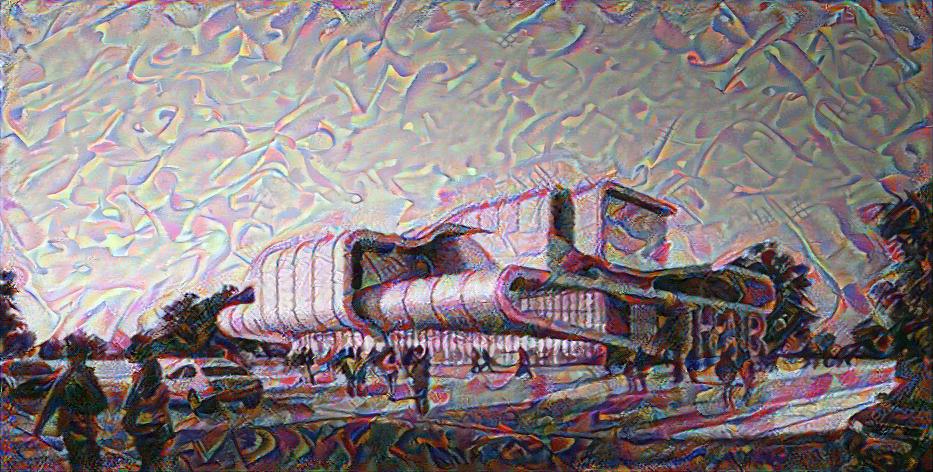

As a result, the parameter image evolves as follows (initialized with a grayscale image):

| First few epochs | Exploded (2nd-row image) | Recovered |
|---|---|---|
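The text only states that the parameter image was initialized with a grayscale image. One plausible reading, sketched below in NumPy, is a grayscale copy of the content image. That reading, the function name `grayscale_init`, and the choice of BT.601 luma weights are assumptions, not details taken from the repo.

```python
import numpy as np


def grayscale_init(content_rgb: np.ndarray) -> np.ndarray:
    """Return a 3-channel grayscale copy of an (H, W, 3) float RGB image."""
    # BT.601 luma weights (an assumed choice; a plain channel mean would also work).
    weights = np.array([0.299, 0.587, 0.114])
    luma = content_rgb @ weights                  # (H, W)
    # Repeat the single channel so the result still has the 3 channels VGG-19 expects.
    return np.repeat(luma[..., None], 3, axis=2)  # (H, W, 3)
```

Starting from such an image keeps the content layout while leaving all color to be filled in by the style term.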

## Results

- CPU training is far too slow: 800 iterations took me 2+ hours (i5-8250U, 8th Gen, @ 1.60GHz).

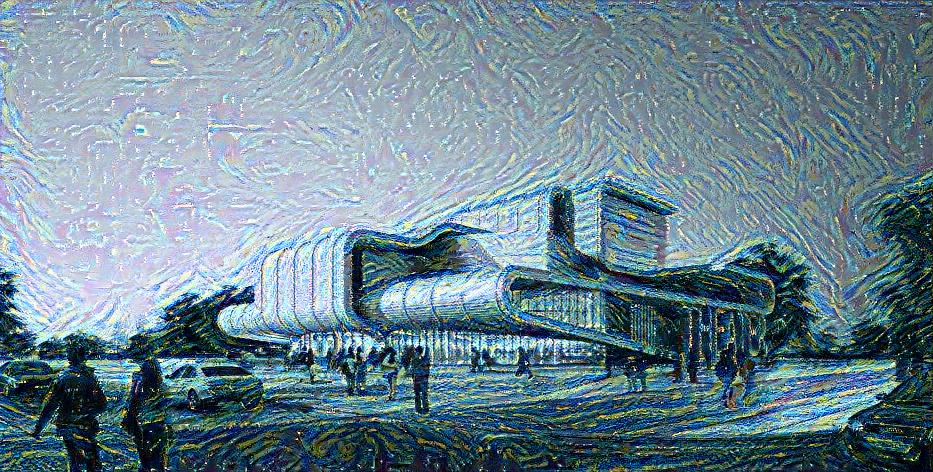

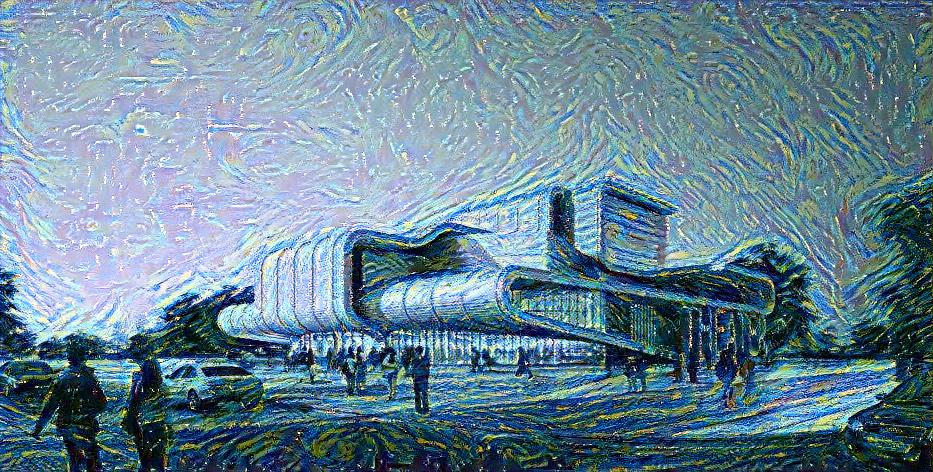

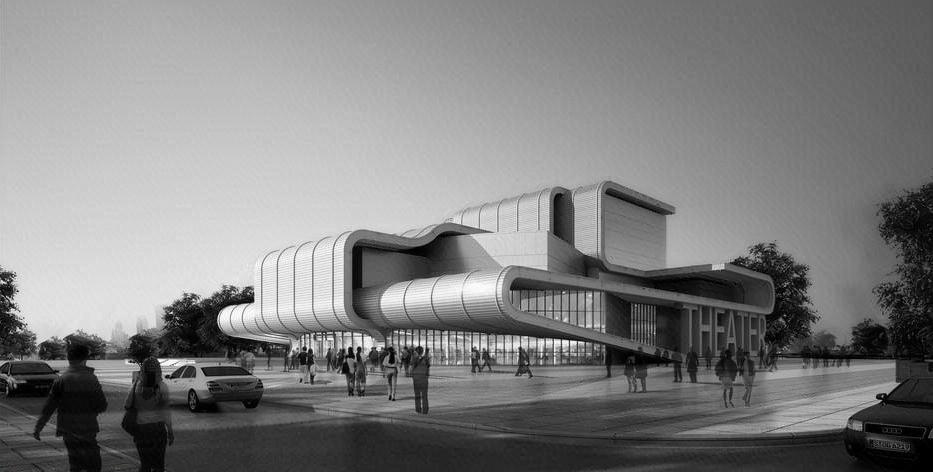

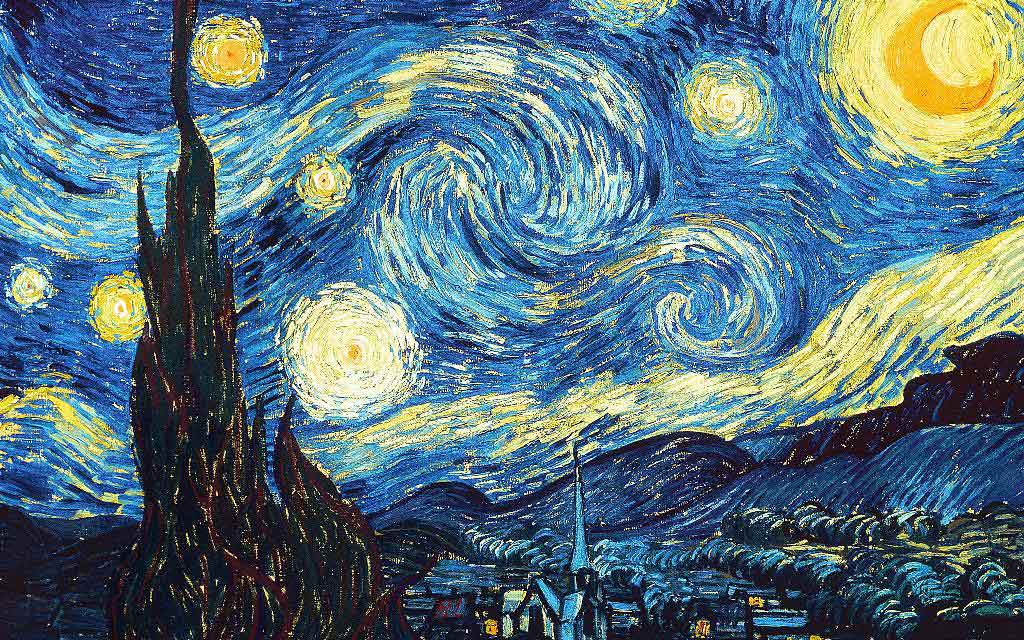

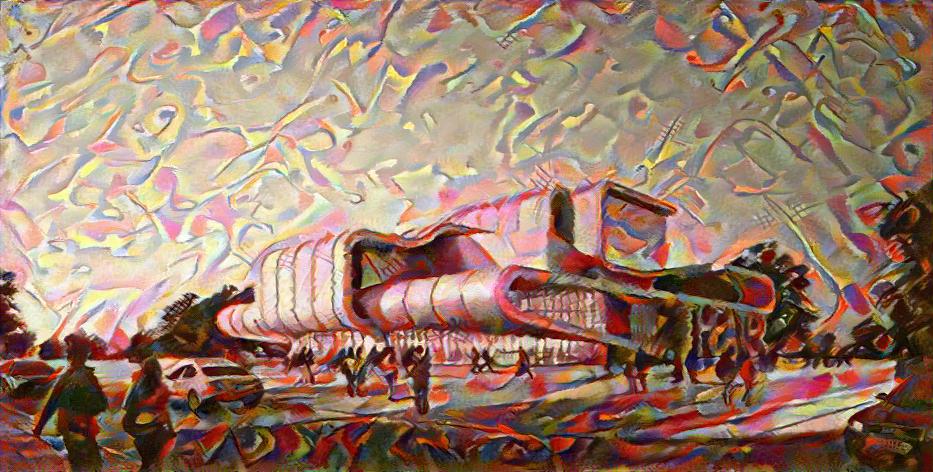

| Style | Content | Output (800 iterations) |
|---|---|---|

- I also did a Van Gogh self-portrait style transfer for my dad. It worked, but the result is not appropriate to display.